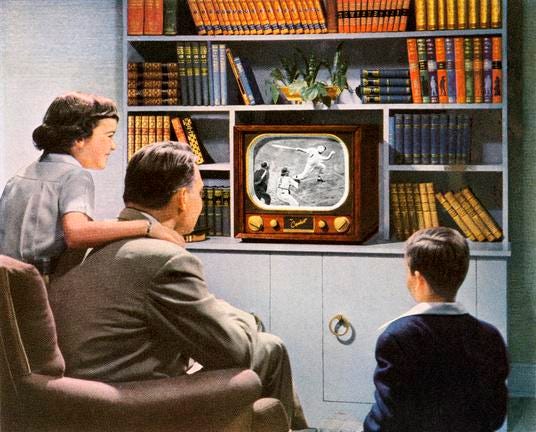

Getty Images

Measuring media has never been as precise as stakeholders would like. Adding CTV (connected TV) and ATV (advanced TV) to an already intricate mix compounds the complexities.

I recently asked Scott McDonald, president and CEO of the Advertising Research Foundation, to update us on efforts to improve the process.

Paul Talbot: Could you share a bit of historical perspective? How does where we stand today with efforts to develop robust audience measurements for CTV and OTT compare with where we were 70 years ago working to develop audience measurement estimates for broadcast TV?

Scott McDonald: 2021 vs 1951? What’s the same? The market wants an independent measurement of the reach of a TV ad campaign. This measurement is a key ingredient in setting prices between buyers and sellers – which is why it’s called a ‘currency.’ But not much else is the same.

What’s different?

Back then there were only three networks, everything was broadcast free over the air, and the most popular shows got over 50% share of viewing. Measurement was done by recruiting panels to fill out diaries reporting their viewing, and ads were purchased based on the viewing of the program.

Now the market is vastly bigger but incredibly fragmented across different platforms and devices, with programs and ads sometimes viewed in different apps, each with their own measurement challenges.

Most measurement is passive and direct (no diaries), but because each person watches on multiple platforms and devices, understanding the deduplicated reach of a campaign is a challenge.

And now part of the TV market is addressable (ads can be targeted based on household or geographic characteristics rather than program context). Also, now a significant chunk of the market watches subscription-based ad-free TV. One huge difference is that back in 1951, an independent company like Nielsen could measure the market without soliciting cooperation from the companies being measured.

That is less true now since many of the signals needed for measurement must be passed by multiple distributors to be read by the measurement systems, a situation that requires cooperation between the measured and the measurers.

Talbot: What needs to happen for all the different stakeholders to be provided with quality CTV and OTT audience data?

McDonald: Now that the networks all own the data for viewing on their platforms, they need to agree to share these data in measurement solutions. Part of this means that the signals that identify a unit of ad or program content need to be interoperable (standardized) so that they can be read and comprehended across the fragmented landscape.

Beyond this, to get to the deduplication, we need some privacy-centric way of figuring out how those different screens and platforms map back to persons or households.

If we can’t get this done in a manner that is consistent with privacy requirements, then one approach is to aggregate those persons and households up to big enough groupings to make them useful to advertisers but impossible to reverse-engineer back to tracking and individual targeting.

If we can’t figure out how to do that to everyone’s satisfaction, then we need to go back to pure contextual targeting which seems retrograde to many, but which was perfectly adequate for the first 60 years of TV advertising.

There is a lot of debate in the industry about how to make sure that everyone gets counted, and that concern remains a strong argument for high-quality panels to correct for the inadequacies of the big data.

Talbot: What are some of the common misconceptions surrounding the measurement of these audiences?

McDonald: Until recently, it was often assumed that the audiences for CTV and OTT were younger and/or more affluent. However, the demographic profile of streamers broadened considerably during Covid, so these assumptions aren’t true anymore.

Some media companies have been assuming that households will continue to spend ever more on subscription TV, but the evidence suggests that there are limits.

Talbot: What’s the situation concerning the financial investments required to fund the necessary measurement technologies?

McDonald: These measurement challenges are technically difficult, even if you have a lot of cooperation and interoperability. That means that they will require a lot of capital.

Some of these barriers could be mitigated by more cooperation: agreeing on common technical standards and collaborating on things like universe estimate studies and approaches to ‘truth sets.’

But even if we resolve some of these problems, measurement will still be expensive and competitive. It has been challenging to introduce alternative measurement since most networks can’t afford to pay for more than one currency-grade measurement system at the same time during a transition. However, this can work if the new alternative can be produced more affordably.